Welcome to this article by the team at neatprompts.com.


Meta Platforms, the parent company of Facebook, Instagram, WhatsApp, and Oculus VR, has announced another AI initiative. Shortly after launching Audiobox, its voice-cloning AI model, Meta has begun a new trial in the United States.

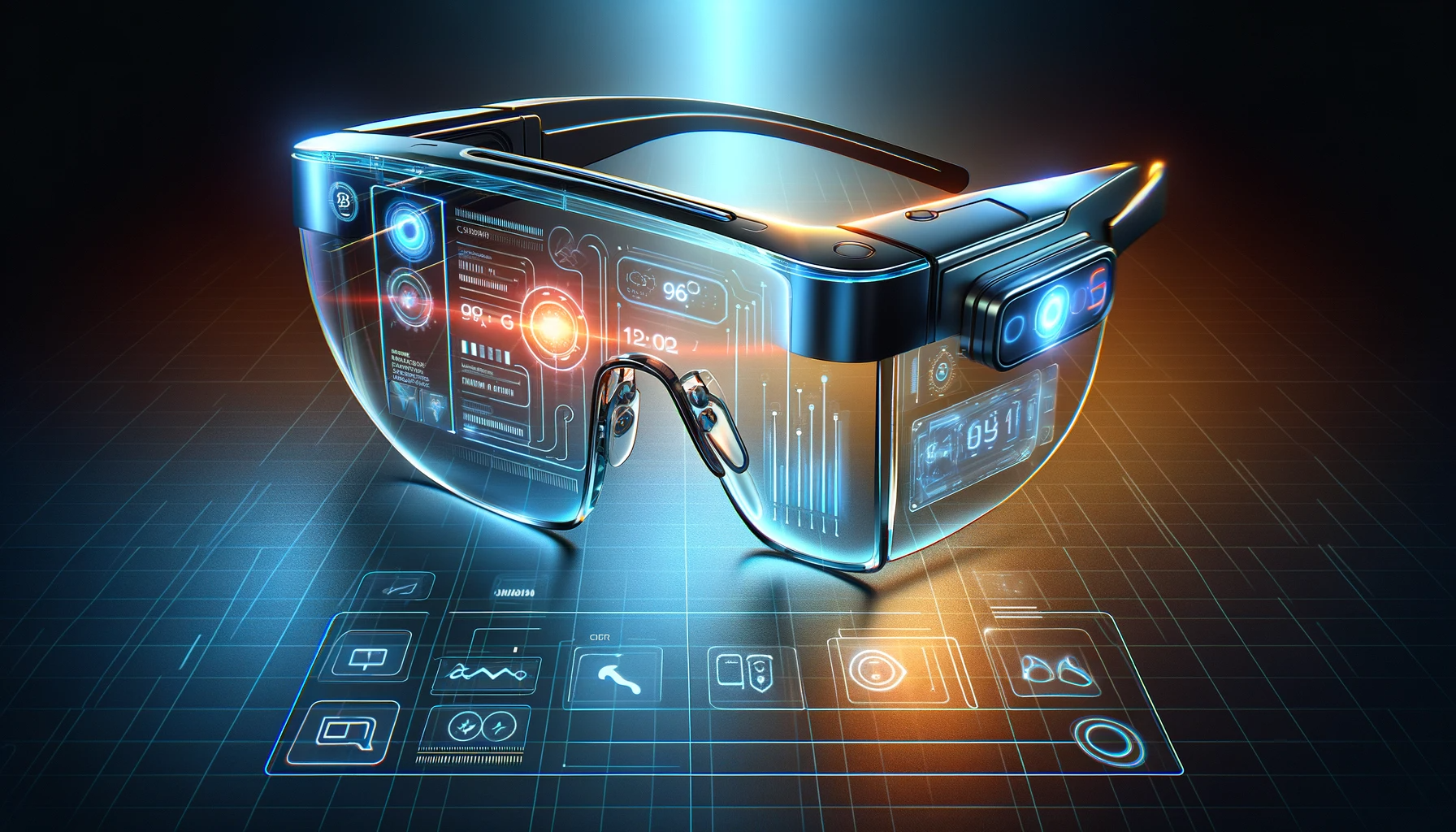

This week, Meta began a limited trial of a multimodal AI built for the Ray-Ban Meta smart glasses, which Meta developed in partnership with the eyewear brand Ray-Ban.

The trial marks a new step in Meta's push into wearable technology.

Meta's Multimodal AI: A New Era in Smart Glasses

Meta's new multimodal AI is slated for a public launch in 2024. Unlike a voice-only assistant, it is designed to combine what it hears with what it sees: it uses the camera on the glasses to gather context about the user’s surroundings and can answer spoken questions about what the wearer is looking at.

Early Access and Features

Meta's Chief Technology Officer, Andrew Bosworth, said that a beta version of the AI will be tested in the U.S. through an early access program. In a demonstration video, Bosworth, wearing the glasses, looked at a piece of wall art, and the AI identified it as a "wooden sculpture." This is a clear step up from the glasses' existing assistant, which responds to voice commands but has limited capabilities and cannot interpret what the camera sees.

Impact on the Tech Industry and User Experience

Meta's initiative reflects a broader trend of building AI into everyday products and platforms. It also marks a shift from AI models accessed through the web to AI embedded in physical devices. Smaller startups have already launched dedicated AI devices, but Meta's move stands out for its scale and for building on an established consumer product, the Ray-Ban Meta smart glasses.

Challenges and Future Prospects

Like any new technology, the AI glasses face open questions. One is user acceptance, particularly privacy concerns around wearing a camera-equipped device. Meta's effort recalls Google’s earlier attempt with Google Glass, which was criticized for its appearance and its limited practical use.

Conclusion

By testing a multimodal AI that rivals OpenAI's GPT-4V in its Ray-Ban smart glasses, Meta is taking a bold step toward more interactive and intuitive wearable technology. It is too early to predict whether the venture will succeed, but it sets the stage for further developments in AI-assisted devices. Ahead of the public launch in 2024, the tech world will be watching closely to see how this multimodal AI changes the way we interact with technology and the world around us.